When it comes to virtualization, many administrators still believe that NFS is not suitable for serious enterprise workloads and that protocols like iSCSI or Fibre Channel are required to achieve acceptable performance.

While this assumption may have been true years ago, modern infrastructure tells a very different story. With fast networking becoming the norm, protocol efficiency and latency matter far more than raw bandwidth alone.

NFS is often underestimated due to outdated assumptions or suboptimal configurations. In many Proxmox environments, NFSv3 is still used by default, which often leads to unnecessary performance limitations.

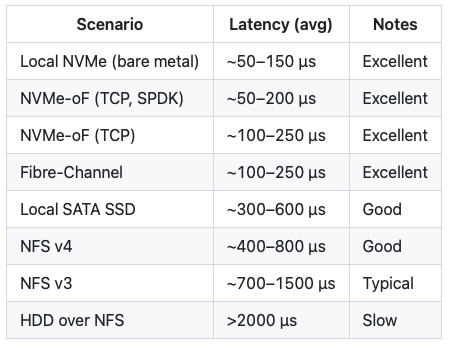

Latencies by Storage Type

Before comparing NFSv3 and NFSv4 directly, it is useful to establish a general baseline of latency expectations across different storage technologies commonly used with Proxmox.

This overview helps put the later benchmark results into perspective and shows where NFS typically sits compared to block-based and NVMe-backed solutions.

NFSv4 Advantages Over NFSv3

NFSv4 introduces several architectural and protocol-level improvements that directly affect latency, scalability, and overall efficiency in virtualized environments.

Unlike NFSv3, which is largely stateless and limited in concurrency, NFSv4 is designed to better utilize modern CPUs and high-speed networks.

- nconnect for multiple TCP connections per mount (a Linux client mount option that also works with NFSv3)

- pNFS (Parallel NFS, available from NFSv4.1) for direct client-to-storage data paths

- Stateful protocol with improved locking and recovery behavior

- More efficient metadata and attribute handling

When properly configured, these features make NFSv4 a strong choice for latency-sensitive workloads such as databases, CI pipelines, and machine learning tasks on Proxmox.
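Before benchmarking, it helps to confirm which protocol version a mount actually negotiated, since a share can end up on NFSv3 without any explicit setting. The following is a minimal sketch, assuming a Linux client (such as the Proxmox node) where `/proc/mounts` lists a `vers=` option for each NFS mount; the function name `nfs_mount_versions` is made up for this example.

```shell
# Print "<mount point> <NFS version>" for every NFS mount listed in a
# mounts file (defaults to /proc/mounts on a Linux client).
# Assumption: the mount options field contains a "vers=" entry.
nfs_mount_versions() {
    mounts_file="${1:-/proc/mounts}"
    awk '$3 == "nfs" || $3 == "nfs4" {
        vers = "unknown"
        n = split($4, opts, ",")
        for (i = 1; i <= n; i++)
            if (opts[i] ~ /^vers=/) vers = substr(opts[i], 6)
        print $2, vers
    }' "$mounts_file"
}
```

Called without arguments on the Proxmox node, `nfs_mount_versions` prints one line per NFS mount. If a share reports version 3, the version can usually be pinned through the `options` property of the NFS storage definition (for example `options vers=4.2` in `/etc/pve/storage.cfg`), provided the server exports NFSv4.2.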

Test Setup

To ensure meaningful and reproducible benchmark results, all tests were conducted using a fixed and clearly defined hardware and software configuration.

The following sections outline the virtual machine, Proxmox node, and storage server used during testing.

Virtual Machine

The virtual machine configuration was intentionally kept minimal to reduce guest-side overhead and focus on storage latency.

- Operating System: Minimal Debian

- vCPU: 4

- Memory: 4 GB

- Disk Controller: VirtIO SCSI Single

- Network: 2 × 2.5 Gbit

Proxmox Node

This Proxmox VE node represents a realistic modern homelab or edge deployment with sufficient CPU and network capacity.

- System: Ace Magician AM06 Pro

- Operating System: Proxmox VE 8.4.1

- CPU: AMD Ryzen 5 5500U

- Memory: 64 GB DDR4

- Network: 2 × Intel I226-V 2.5 Gbit

Storage Server

The storage backend is NVMe-based and intentionally disk-bound to highlight protocol efficiency rather than raw hardware limitations.

- System: GMKtec G9 NAS

- Operating System: FreeBSD 14.3

- CPU: Intel N150

- Memory: 12 GB DDR5

- Storage: 2 × WD Black SN7100 NVMe (ZFS Mirror)

- Network: 2 × Intel I226-V 2.5 Gbit

NFSv3 Random Read Benchmark

This benchmark measures random 4K read latency using NFSv3 with a single job and minimal queue depth to highlight protocol overhead.

fio --rw=randread --name=IOPS-read --bs=4k --direct=1 --filename=/dev/sda \
    --numjobs=1 --ioengine=libaio --iodepth=1 --refill_buffers --group_reporting \
    --runtime=60 --time_based

This fio command performs random 4K reads against /dev/sda inside the VM, using direct I/O to bypass the guest page cache and expose raw storage and protocol latency.

By using a single job and an I/O depth of one, the test intentionally models latency-sensitive workloads such as database queries, VM boot operations, or synchronous metadata access.

IOPS-read: read: IOPS=1641, BW=6566KiB/s, lat avg=607.07µs

clat percentiles: 50% = 644µs, 95% = 758µs, 99.99% = 1942µs

Disk utilization: 96.42%

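With NFSv3, the workload reaches approximately 1,641 IOPS at an average latency of around 607 µs. The median (644 µs) is higher than the mean, which points to a left-skewed distribution: a minority of faster completions pulls the average down, while a typical request takes longer. Latency rises to 758 µs at the 95th percentile and 1,942 µs at the 99.99th. The disk utilization of over 96% is reported for the device fio tests, here the guest's /dev/sda, so it shows that the virtual disk had a request in flight for almost the entire run rather than that the NVMe drives themselves were saturated.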
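With a single job and an I/O depth of one, only one request is in flight at a time, so IOPS should be close to the reciprocal of the average latency, and bandwidth close to IOPS times the block size. The sketch below checks the reported NFSv3 figures against that relationship; the helper names `qd1_iops` and `bw_kib` are made up for this example, and `awk` is assumed to be available.

```shell
# Expected IOPS at queue depth 1 for an average latency given in microseconds.
qd1_iops() {
    awk -v lat_us="$1" 'BEGIN { printf "%.0f\n", 1000000 / lat_us }'
}

# Bandwidth in KiB/s for a given IOPS figure and block size in KiB.
bw_kib() {
    awk -v iops="$1" -v bs_kib="$2" 'BEGIN { printf "%.0f\n", iops * bs_kib }'
}

qd1_iops 607.07   # NFSv3 average total latency from the fio output above
bw_kib 1641 4     # reported NFSv3 IOPS at a 4 KiB block size
```

This prints 1647 and 6564, both within about 0.5% of the reported 1,641 IOPS and 6,566 KiB/s. The small gaps are most likely due to rounding in the reported figures and to time spent between requests.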

NFSv4 Random Read Benchmark

The same workload was repeated using NFSv4.2 to ensure a fair and directly comparable latency measurement.

fio --rw=randread --name=IOPS-read --bs=4k --direct=1 --filename=/dev/sda \
    --numjobs=1 --ioengine=libaio --iodepth=1 --refill_buffers --group_reporting \
    --runtime=60 --time_based

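All fio parameters were kept identical to the NFSv3 test, which rules out differences in block size, queue depth, or access pattern, and direct I/O excludes guest page-cache effects in both runs. Server-side caching is not controlled by these parameters, so the comparison assumes the storage server was in a comparable state for both tests. Under that assumption, the observed differences can be attributed to the protocol version.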

IOPS-read: read: IOPS=2377, BW=9509KiB/s, lat avg=419.05µs

clat percentiles: 50% = 379µs, 95% = 545µs, 99.99% = 1680µs

Disk utilization: 95.84%

Under NFSv4.2, the same workload achieves 2,377 IOPS while reducing average latency to approximately 419 µs. Median latency drops from 644 µs to 379 µs and the 99.99th percentile from 1,942 µs to 1,680 µs, indicating more predictable and responsive read behavior. Disk utilization stays at roughly 96%, so the guest's virtual disk was kept just as busy as before; the additional IOPS come from each request completing faster.

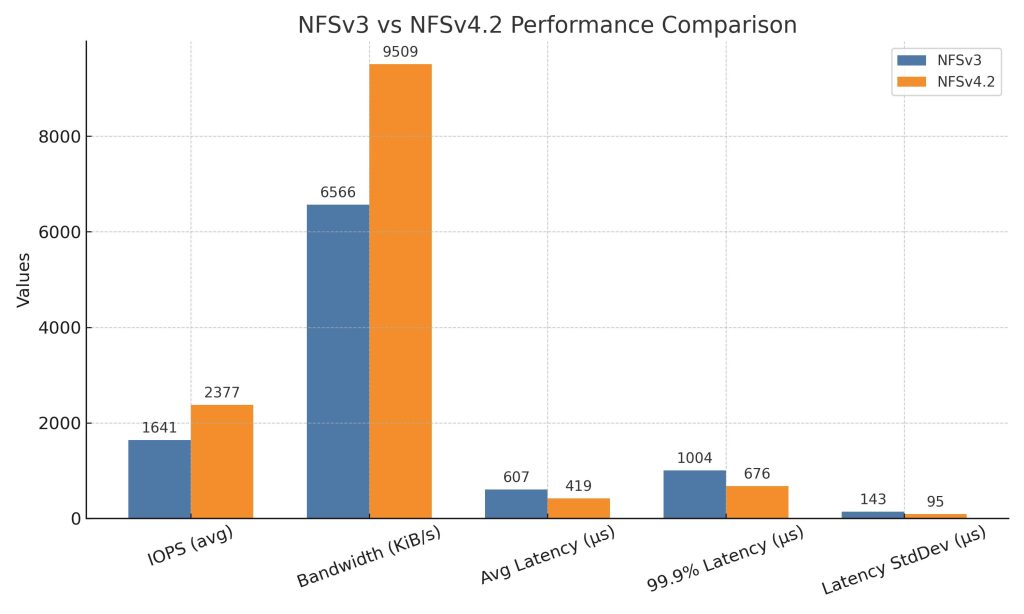

Latency Comparison

The following table summarizes the most relevant performance and latency metrics observed during testing.

| Metric | NFSv3 | NFSv4.2 | Notes |
|---|---|---|---|
| IOPS (avg) | 1641 | 2377 | ~45% higher |
| Bandwidth (avg) | 6566 KiB/s | 9509 KiB/s | ~45% higher |
| Completion latency (avg) | 597.54 µs | 411.62 µs | ~31% lower |
| Total latency (avg) | 607.07 µs | 419.05 µs | ~31% lower |
| Max latency | 4319 µs | 3057 µs | ~29% lower |
| CPU usage (sys) | 2.85% | 3.37% | Slight increase |
| Disk utilization | 96.42% | 95.84% | Comparable in both runs |

In this test, NFSv4.2 delivers about 31% lower average latency, a tighter latency distribution, and about 45% higher throughput than NFSv3.
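The percentages in the Notes column follow from the relative change between the two runs. A minimal sketch for reproducing them, with `pct_change` as a made-up helper name and `awk` assumed to be available:

```shell
# Relative change from the first value to the second, in percent
# with one decimal place. Positive means the second value is higher.
pct_change() {
    awk -v before="$1" -v after="$2" \
        'BEGIN { printf "%.1f\n", (after - before) / before * 100 }'
}

pct_change 1641 2377       # IOPS, NFSv3 vs. NFSv4.2
pct_change 597.54 411.62   # average completion latency in µs
pct_change 4319 3057       # max latency in µs
```

The three calls print 44.9, -31.1, and -29.2, so a positive result means NFSv4.2 is higher than NFSv3 and a negative result means it is lower.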

Conclusion

In this benchmark, NFSv4.2 outperforms NFSv3 on Proxmox, offering approximately 45% higher IOPS and throughput alongside roughly 31% lower average latency and a tighter latency distribution.

While CPU usage increases slightly, the trade-off is more than justified by the performance gains. For most Proxmox deployments, NFSv4 should be the default choice unless there is a specific legacy requirement for NFSv3.

Although technologies such as NVMe-oF or SPDK can push performance even further, NFSv4 remains a simple, well-integrated, and highly effective storage protocol for Proxmox clusters that value predictable latency and ease of deployment.