Virtualization in 2024: Market Trends, Open-Source Opportunities, Broadcom’s VMware Shake-Up, and Top Solutions to Watch

The year 2024 marked a turning point for the virtualization industry. Following Broadcom’s acquisition of VMware, significant changes to VMware’s licensing model and pricing structure set off a wave of responses across the market. These changes had a profound impact on the virtualization landscape, altering long-held assumptions and forcing enterprises to re-evaluate their strategies.

VMware had long been a leader in the virtualization space, with its solutions forming the backbone of countless enterprise data centers. Its platforms, including vSphere, vSAN, and NSX, were considered industry standards, praised for their robustness and feature set. However, post-acquisition, VMware’s licensing and pricing underwent substantial revisions. For many organizations, these changes represented a significant cost increase, leading to concerns about the sustainability of continuing with VMware’s solutions.

The updates, while intended to align with Broadcom’s broader enterprise strategy, prompted businesses to reexamine their dependence on proprietary virtualization platforms. This shift created ripple effects, opening doors to new and alternative solutions.

The virtualization market had long been viewed as mature and stable. Many believed that innovation in hypervisors and virtualization platforms had plateaued, with attention shifting instead to containerization technologies such as Kubernetes. Virtualization, it seemed, had become a foundational layer – a commodity rather than a battleground for innovation.

This perspective began to change at the beginning of 2024. The disruptions introduced by VMware’s pricing strategy revealed an appetite for greater flexibility, cost-effectiveness, and vendor independence. As a result, many enterprises began to explore alternatives, breathing new life into the virtualization market.

The Rise of Open-Source Solutions

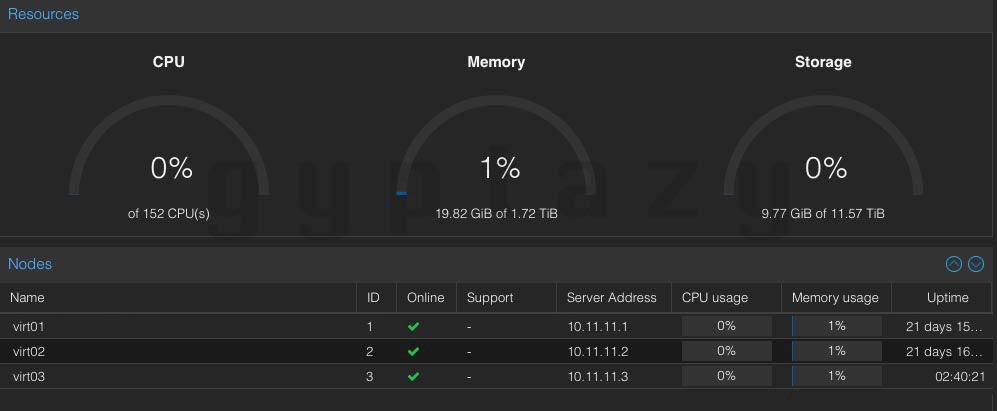

One of the most notable shifts during this period was the surge in interest in open-source virtualization solutions. Projects like Proxmox VE (based on KVM), XCP-ng (based on Xen) together with Xen Orchestra, and oVirt gained traction as organizations sought cost-effective alternatives to proprietary platforms. Other solutions such as SUSE Harvester, OpenStack, OpenNebula, and bhyve-based tools like ClonOS and bhyve-webadmin also gained popularity. In the linked posts you can find more insights from my evaluations and tests of these products, and in the end, this attention is a huge benefit for all of these projects! Long overshadowed by VMware and other commercial offerings, open-source solutions began to emerge as serious contenders, appealing to enterprises with their lower costs, community-driven innovation, and absence of restrictive licensing terms.

This trend also intersected with the broader open-source movement, which had been gaining momentum in areas like cloud-native development and infrastructure automation. As businesses reconsidered their reliance on proprietary virtualization platforms, they increasingly turned to open-source tools as a way to regain control over their infrastructure and budgets.

Which Solution Fits Best?

Almost every day, I’m asked, “What’s the best alternative to VMware?” And let me tell you right up front: there isn’t a direct, one-to-one replacement for VMware’s virtualization products. VMware’s suite, including vSphere, ESXi, and related tools, has set an industry standard with its depth, breadth, and enterprise-grade reliability. But don’t despair: there are numerous alternatives out there, each with its own strengths, weaknesses, and ideal use cases. The right choice for your needs depends less on the features of the platform and more on how well it aligns with your goals, infrastructure, and expertise.

When exploring VMware alternatives, the first thing to acknowledge is that no single product will fit every situation perfectly. Many open-source platforms provide excellent virtualization solutions, but they come with their own sets of trade-offs. The question isn’t about finding the platform with the most features or the highest level of sophistication; it’s about identifying the solution that aligns most closely with your needs, environment, and expertise.

Among these alternatives, Proxmox is one of the most well-known and frequently discussed. Its blend of virtualization and containerization capabilities makes it a compelling option for small to medium-sized environments, particularly for users who value open-source software and don’t require extensive proprietary integrations. However, Proxmox isn’t a universal solution. For larger, more complex infrastructures or scenarios requiring high degrees of customization, other platforms might be a better fit. The key is understanding that no platform – including Proxmox – excels at everything. Choosing it simply because it’s popular or straightforward could lead to challenges in scaling or addressing specific corner cases in the long term. I will cover this in more detail later in this post.

The landscape of virtualization platforms ranges from tools that are refreshingly simple to others so complex they feel like lifelong projects. Some solutions offer a quick path to virtualization but may fall short in high-availability scenarios, while others deliver powerful performance but require a significant learning curve and considerable management overhead. This variation underscores the importance of taking a thoughtful, needs-first approach when evaluating your options.

Ultimately, selecting a VMware alternative is less about finding a “better” product and more about identifying the right product for your specific use case. Features are important, but they aren’t everything. The best choice is the one that integrates smoothly with your existing workflows, addresses your most critical requirements, and fits the scale and scope of your operations. While no alternative replicates VMware exactly, many provide a solid foundation for virtualization if approached with clear objectives and a realistic understanding of their capabilities. So let’s take a closer look at the major open-source solutions in today’s virtualization market.

Proxmox

Proxmox is perhaps the most frequently mentioned alternative when discussing replacements for VMware’s virtualization platform. Its reputation is well-deserved, thanks to its ease of use, vibrant community, and the availability of numerous external tools that enhance its capabilities. For many users, especially in smaller environments, Proxmox offers an accessible and feature-rich entry point into virtualization without the heavy licensing costs associated with VMware.
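Most of these external tools talk to Proxmox through its REST API. As a minimal sketch (Python standard library only, nothing is actually sent), the following builds an authenticated request against the `/cluster/resources` endpoint using an API token. The host name, token ID, and secret are placeholders made up for illustration; the `PVEAPIToken=USER@REALM!TOKENID=SECRET` header format and port 8006 are Proxmox VE defaults, but verify them against the API documentation of your version.

```python
import urllib.request

# Placeholder values for illustration only; use your own host and API token.
PVE_HOST = "pve1.example.com"
TOKEN_ID = "automation@pve!readonly"
TOKEN_SECRET = "00000000-0000-0000-0000-000000000000"


def build_cluster_resources_request(host: str, token_id: str, secret: str) -> urllib.request.Request:
    """Build (but do not send) a GET request listing all guests in the cluster."""
    url = f"https://{host}:8006/api2/json/cluster/resources?type=vm"
    # API tokens are passed as: PVEAPIToken=USER@REALM!TOKENID=SECRET
    headers = {"Authorization": f"PVEAPIToken={token_id}={secret}"}
    return urllib.request.Request(url, headers=headers, method="GET")


def summarize(payload: dict) -> list:
    """Reduce a /cluster/resources response to (node, name, status) tuples."""
    return [(r["node"], r.get("name", ""), r["status"]) for r in payload["data"]]


if __name__ == "__main__":
    req = build_cluster_resources_request(PVE_HOST, TOKEN_ID, TOKEN_SECRET)
    print(req.get_method(), req.full_url)
    # Made-up response excerpt so the sketch runs offline; against a real host you
    # would send the request with urllib.request.urlopen(req) and proper TLS checks.
    sample = {"data": [{"vmid": 100, "type": "qemu", "node": "pve1", "name": "web01", "status": "running"}]}
    print(summarize(sample))
```

Keeping the request construction separate from sending it makes the sketch testable offline; a read-only token is usually enough for tools that only collect metrics.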

In 2024, Proxmox introduced several notable updates that further solidify its appeal. The addition of an nftables-based firewall as an alternative to iptables modernized its networking capabilities, and the ESXi live import wizard simplified the migration of virtual machines from VMware, making transitions smoother than ever. The inclusion of Software Defined Networking (SDN) expanded its flexibility in managing virtualized networks, while updates to its ecosystem tools like Proxmox Backup Server and Ceph – now supporting Ceph Squid – enhanced its viability as a hyper-converged infrastructure (HCI) solution. These enhancements demonstrate Proxmox’s commitment to continuous improvement and its focus on addressing the needs of its users.

Other companies, such as Veeam, have recognized the growing adoption of Proxmox and extended their product support to include it. With Veeam’s integration, transitioning to Proxmox becomes significantly easier, as existing backup solutions can remain in place, eliminating the additional burden of switching to a new backup system and reducing the associated time, effort, and training costs.

However, while Proxmox is a fantastic choice for small to medium deployments, it may not scale effectively for larger or more complex environments. The platform relies on Corosync and its PMXCFS (Proxmox Cluster File System) for clustering, which can be challenging to manage in high-latency networks. Corosync, while robust, is highly sensitive to network latency, making it unsuitable for large-scale clusters or multi-site setups without careful planning. This is one of the most common issues I encounter when helping users troubleshoot Proxmox clusters. Problems often arise when ring interfaces (used for cluster communication) share the same network paths as uplink or storage traffic, leading to performance bottlenecks and instability. Proxmox clusters with more than 20–25 nodes require advanced architectural considerations, and multi-site deployments are particularly difficult to implement without dedicated low-latency links, such as dark fiber.
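A quick sanity check during cluster design is to make sure the Corosync ring networks do not overlap with storage or uplink subnets, or with each other. The following sketch uses Python’s `ipaddress` module on a hypothetical subnet plan (the network names and the `corosync_` prefix convention are assumptions for illustration). Note that it only catches logical overlaps: two separate subnets can still share the same physical NIC or switch, which this check cannot see.

```python
import ipaddress
from itertools import combinations

# Hypothetical subnet plan; "storage" deliberately sits inside ring0's subnet.
NETWORKS = {
    "corosync_ring0": "10.10.10.0/24",
    "corosync_ring1": "10.10.11.0/24",
    "storage": "10.10.10.128/25",
    "uplink": "192.168.1.0/24",
}


def shared_paths(networks: dict, ring_prefix: str = "corosync_") -> list:
    """Return pairs of overlapping subnets where at least one side is a Corosync ring."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    conflicts = []
    for a, b in combinations(parsed, 2):
        involves_ring = a.startswith(ring_prefix) or b.startswith(ring_prefix)
        if involves_ring and parsed[a].overlaps(parsed[b]):
            conflicts.append((a, b))
    return conflicts


if __name__ == "__main__":
    print(shared_paths(NETWORKS))
```

With the sample plan, the check reports `[("corosync_ring0", "storage")]`, exactly the kind of shared path that tends to cause instability under storage load.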

Moreover, some features commonly expected in enterprise-grade virtualization platforms are still missing from Proxmox. Tools like Distributed Resource Scheduler (DRS) or Distributed Power Management (DPM), which are staples in the VMware ecosystem, are absent. For users who rely on automated workload balancing or energy efficiency optimizations, this can be a significant limitation. However, the flexibility of Proxmox’s ecosystem allows for custom solutions. For instance, my experience with free VPS hosting on BoxyBSD led me to create ProxLB, a tool that adds workload balancing to Proxmox clusters. While this is a testament to the adaptability of Proxmox, it also highlights the gaps that still need to be filled to fully compete with VMware in larger deployments.
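To illustrate what workload balancing boils down to, here is a deliberately simplified greedy planner. This is not ProxLB’s actual algorithm or configuration: the `Guest` type, the memory-only metric, the `max_spread_gib` threshold, and the sample data are all hypothetical. It repeatedly picks the busiest and the idlest node and moves the guest whose migration brings the two closest to equal, stopping once the spread is small enough or no single move helps.

```python
from dataclasses import dataclass


@dataclass
class Guest:
    name: str
    node: str
    mem_gib: float  # assigned memory, the only metric in this sketch


def node_load(guests, nodes):
    """Sum assigned guest memory per node."""
    load = {n: 0.0 for n in nodes}
    for g in guests:
        load[g.node] += g.mem_gib
    return load


def plan_migrations(guests, nodes, max_spread_gib=4.0, max_moves=10):
    """Greedy plan: move guests from the busiest to the idlest node until balanced."""
    guests = [Guest(g.name, g.node, g.mem_gib) for g in guests]  # never mutate the input
    moves = []
    for _ in range(max_moves):
        load = node_load(guests, nodes)
        busiest = max(load, key=load.get)
        idlest = min(load, key=load.get)
        spread = load[busiest] - load[idlest]
        if spread <= max_spread_gib:
            break
        # Moving m GiB changes the gap between the two nodes to |spread - 2m|,
        # which only shrinks if 0 < m < spread.
        candidates = [g for g in guests if g.node == busiest and 0 < g.mem_gib < spread]
        if not candidates:
            break
        best = min(candidates, key=lambda g: abs(spread - 2 * g.mem_gib))
        moves.append((best.name, busiest, idlest))
        best.node = idlest
    return moves


if __name__ == "__main__":
    nodes = ["pve1", "pve2", "pve3"]
    guests = [
        Guest("web01", "pve1", 8),
        Guest("web02", "pve1", 8),
        Guest("db01", "pve1", 16),
        Guest("app01", "pve2", 4),
    ]
    for name, src, dst in plan_migrations(guests, nodes):
        print(f"migrate {name}: {src} -> {dst}")
```

With the sample data, the planner proposes moving db01 to pve3 and then web01 to pve2, leaving 8, 12, and 16 GiB; it then stops because no guest on pve3 is smaller than the remaining 8 GiB gap. A real balancer would also weigh CPU and storage usage, affinity rules, and the cost of each live migration.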

In summary, Proxmox is an excellent platform with a growing feature set and a strong community backing it. It’s well suited for small to medium environments and for users who value open-source software, and it also supports top-notch features such as NVMe-oF (including with NetApp storage systems). However, its scaling limitations and missing enterprise-grade features mean that it’s not always the best choice for larger, more demanding use cases. Careful consideration of your infrastructure’s needs and limitations is essential when choosing Proxmox as a VMware alternative.

You can also find some more specific topics related to Proxmox from me right here:

- Migrating VMs from VMware ESXi to Proxmox

- Integrating Proxmox Backup Server into Proxmox Clusters

- NetApp Storage and NVMe-oF for Breakthrough Performance in Proxmox Virtualization Environments

- How and when to use Software-Defined Networks in Proxmox VE

- Veeam & Proxmox VE: Opportunities for Open-Source Virtualization

XCP-ng & Orchestra

XCP-ng, introduced in 2018, stands as a compelling Linux distribution centered on the Xen Project. Built to integrate a pre-configured Xen Hypervisor and Xen API (XAPI), it represents a seamless, open-source solution for virtualization needs. The project emerged from a fork of Citrix XenServer, which was open-sourced in 2013 but later transitioned away from being a truly free offering. With this shift, XCP-ng was born, rejuvenating the ethos of an accessible virtualization platform. By 2020, its credibility and importance were solidified as it joined the Linux Foundation under the aegis of the Xen Project.

At its core, XCP-ng is built on Xen, a lightweight microkernel hypervisor with an emphasis on security. Unlike full-fledged Linux kernels with millions of lines of code, Xen is streamlined to about 200,000 lines, making it inherently more stable and resilient to vulnerabilities. While this lean design ensures a highly secure and efficient architecture, it does limit hardware compatibility. However, XCP-ng supports most enterprise-grade hardware, which minimizes this concern for business users. Home lab enthusiasts, on the other hand, may face challenges when working with less common setups.

Architecturally, XCP-ng bears similarities to VMware’s virtualization stack, providing a familiar experience for users transitioning from enterprise-level solutions. However, it’s worth noting that XCP-ng deviates significantly from platforms like Proxmox, both in its approach and operational nuances. New users accustomed to Proxmox might need additional time and practice to grasp XCP-ng’s workflows, but this learning curve rewards them with a robust and reliable environment. I already wrote a post about XCP-ng 8.2, and fortunately, several things have changed and improved with the new XCP-ng 8.3 release.

One of XCP-ng’s distinctive features is its reliance on a management appliance, often referred to as Orchestra (short for Xen Orchestra). This virtual machine handles all management tasks within a cluster. While this centralized approach simplifies operations, it introduces potential challenges if the management VM becomes unavailable. Thankfully, running virtual machines on the hypervisor remains unaffected, even in such scenarios. For open-source enthusiasts, deploying Orchestra requires extra effort, such as building and maintaining the image independently. Establishing a CI/CD pipeline can simplify this process, though a commercial license streamlines deployment considerably.

With the release of version 8.3, XCP-ng has undergone significant enhancements, broadening its appeal and usability. A key addition is the introduction of the XO-Lite interface, a minimalistic web-based management tool available on all nodes. XO-Lite makes initial management and single-node setups more accessible. Other advancements include the ability to create IPv6-only cluster configurations, improved support for Windows 11 with virtual TPM (vTPM), and the inclusion of a largeblock storage driver. This driver mitigates limitations with 4K native disks by emulating a 512-byte block size, albeit with minor performance trade-offs.
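The performance trade-off of 512-byte emulation on 4K native disks comes from alignment. The sketch below shows the general arithmetic only, not the actual code of XCP-ng’s largeblock driver: each 4096-byte physical block holds eight 512-byte logical sectors, and any write that does not start and end on a 4K boundary forces a read-modify-write of the surrounding physical block.

```python
LOGICAL = 512                 # sector size presented to the VM
PHYSICAL = 4096               # native sector size of the 4Kn disk
RATIO = PHYSICAL // LOGICAL   # 8 logical sectors per physical block


def map_lba(lba512: int) -> tuple:
    """Map a 512-byte logical sector to (4K physical block, byte offset within it)."""
    block, index = divmod(lba512, RATIO)
    return block, index * LOGICAL


def physical_blocks(lba512: int, count: int) -> range:
    """4K physical blocks touched by a request of `count` logical sectors."""
    first = lba512 // RATIO
    last = (lba512 + count - 1) // RATIO
    return range(first, last + 1)


def needs_read_modify_write(lba512: int, count: int) -> bool:
    """A write avoids read-modify-write only if it starts and ends on a 4K boundary."""
    return lba512 % RATIO != 0 or (lba512 + count) % RATIO != 0


if __name__ == "__main__":
    print(map_lba(13))                      # (1, 2560)
    print(list(physical_blocks(6, 4)))      # [0, 1]
    print(needs_read_modify_write(8, 8))    # False: exactly one aligned 4K block
    print(needs_read_modify_write(13, 1))   # True: single sector inside block 1
```

Since modern guest operating systems mostly issue 4K-aligned I/O, the extra reads are rare in practice, which is consistent with the trade-off being described as minor.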

The update also introduced PCI passthrough capabilities, a highly requested feature that is now seamlessly integrated into the Xen Orchestra interface. Users can exclude disks from virtual machine snapshots and designate specific networks for live migrations, particularly useful during host evacuations. These improvements showcase XCP-ng’s commitment to addressing user feedback and evolving needs, making it suitable for small, medium, and large-scale clusters alike.

Beyond its technical prowess, XCP-ng owes much of its success to the dedication of its community and leadership. Olivier Lambert, CEO of Vates, stands as a beacon of support and enthusiasm. His direct engagement with the community and willingness to provide free support highlight the project’s ethos of accessibility and collaboration. Lambert’s passion underscores the community-driven nature of XCP-ng, making it not just a tool but a collective endeavor.

XCP-ng is more than just an open-source hypervisor; it is a testament to the power of community-driven innovation. Its robust architecture, continuous improvements, and supportive ecosystem make it an excellent choice for those seeking a secure, efficient, and scalable (from small up to large clusters) virtualization solution. Whether for enterprises or individuals, XCP-ng proves itself a worthy contender in the virtualization landscape.

bhyve in FreeBSD

In the realm of virtualization, the conversation often gravitates toward Linux-based solutions, overshadowing the offerings of other robust operating systems. FreeBSD, with its rich legacy and modern capabilities, provides an excellent alternative for virtualization enthusiasts and professionals alike. At the heart of FreeBSD’s virtualization capabilities is bhyve (BSD Hypervisor), a powerful yet streamlined hypervisor that capitalizes on the strengths of the FreeBSD ecosystem.

FreeBSD’s native hypervisor, bhyve, stands out for its tight integration with the operating system. Leveraging the full potential of FreeBSD’s underlying architecture, bhyve is designed to provide efficient virtualization while remaining relatively lightweight. One of the key benefits of running bhyve on FreeBSD is its seamless synergy with features like ZFS, PF (Packet Filter), and FreeBSD’s highly regarded network stack. ZFS, in particular, enhances the bhyve experience by offering strong performance, end-to-end integrity checks, and advanced snapshot capabilities, all of which are critical in a virtualized environment. The combination of ZFS with bhyve allows administrators to confidently manage virtual machines (VMs) with high performance and built-in data protection.

Installing bhyve is a straightforward process, with FreeBSD providing all the necessary components and documentation. Whether you are new to virtualization or an experienced professional, getting started with bhyve is surprisingly quick. In fact, from a bare FreeBSD installation, you can have a VM up and running in under two minutes. This ease of deployment makes bhyve an attractive option for users seeking a minimalist yet capable virtualization solution.
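To give an idea of what happens underneath tools like vm-bhyve, here is a sketch that assembles a `bhyve` command line in Python; it only builds the argument list and does not start anything. The flags follow the FreeBSD Handbook’s bhyve examples (`-c` vCPUs, `-m` memory, `-H`, `-s` PCI slots, `-l` LPC devices), while the VM name, zvol, ISO path, and `tap0` are hypothetical. The tap interface must already exist and be attached to a bridge, and the UEFI firmware path assumes the bhyve UEFI firmware package is installed at the location used in the Handbook.

```python
import shlex

# Path used in the FreeBSD Handbook's UEFI example; adjust if your package differs.
UEFI_FIRMWARE = "/usr/local/share/uefi-firmware/BHYVE_UEFI.fd"


def bhyve_argv(name, cpus, memory, disk, iso=None, tap="tap0", firmware=UEFI_FIRMWARE):
    """Assemble a bhyve command line for a single UEFI guest (nothing is executed)."""
    argv = [
        "bhyve",
        "-c", str(cpus),                 # number of virtual CPUs
        "-m", memory,                    # guest memory, e.g. "2G"
        "-H",                            # yield the vCPU thread when the guest executes HLT
        "-s", "0,hostbridge",            # PCI slot 0: host bridge
        "-s", f"2,virtio-net,{tap}",     # PCI slot 2: paravirtualized NIC on a tap device
        "-s", f"3,virtio-blk,{disk}",    # PCI slot 3: disk, e.g. a ZFS zvol
    ]
    if iso:
        argv += ["-s", f"4,ahci-cd,{iso}"]  # PCI slot 4: installation media
    argv += [
        "-s", "31,lpc",                  # LPC bridge, needed for com1 and the bootrom
        "-l", "com1,stdio",              # first serial port on the current terminal
        "-l", f"bootrom,{firmware}",     # boot through UEFI firmware
        name,                            # VM name is the last positional argument
    ]
    return argv


if __name__ == "__main__":
    argv = bhyve_argv("guest01", 2, "2G", "/dev/zvol/zroot/vm/guest01", iso="/vm/iso/install.iso")
    print(shlex.join(argv))
```

After the guest shuts down, `bhyvectl --destroy --vm=guest01` releases its in-kernel state; managers like vm-bhyve and cbsd handle these steps, plus tap and bridge setup, for you.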

To further simplify VM orchestration, FreeBSD supports a range of tools and interfaces for managing bhyve instances. Solutions like vm-bhyve provide a user-friendly way to control virtual machines, while more comprehensive systems like ClonOS (based on cbsd) extend bhyve into a full-fledged hypervisor distribution. ClonOS bundles web interfaces and additional utilities to streamline the management of VMs, making it ideal for users looking for an integrated approach. For those who prefer standalone web-based management, tools such as BVCP (bhyve-webadmin) offer an intuitive interface to administer VMs with minimal effort.

Despite its many advantages, bhyve is not without limitations. One of its most significant drawbacks lies in its lack of support for clustered deployments. Features like live migration, which allow for seamless VM transfers between hosts, remain immature and unsuitable for production environments. As of 2024, planned maintenance requiring VM downtime is still a reality when using bhyve, which can be a dealbreaker for businesses requiring high availability. For these reasons, bhyve is best suited for single-node setups where simplicity and FreeBSD’s unique advantages can shine. However, there’s still active development and cbsd remains the most promising solution for this.

In conclusion, bhyve is an excellent hypervisor for those seeking a FreeBSD-based solution. It provides exceptional performance, ease of use, and the reliability of FreeBSD’s ecosystem. While its limitations make it less appealing for complex clustered environments, bhyve remains a powerful tool for standalone virtualization needs, offering an efficient and flexible alternative to Linux-dominated solutions. Besides this, you can find more insights about the current state of the live-migration integration here.

If FreeBSD and bhyve sound interesting to you, you can find more impressions of them from me right here:

- bhyve on FreeBSD as a Hypervisor with a Web UI (based on BVCP)

- ClonOS – A FreeBSD based Distribution for Virtualization Environments

- HowTo: Managing VM on FreeBSD with bhyve and vm-bhyve

SUSE Harvester

SUSE Harvester is an innovative, open-source hyper-converged infrastructure (HCI) platform that not only simplifies on-premises operations but also provides a seamless pathway to a cloud-native future. Built on the robust foundation of Kubernetes, Harvester embodies a bold new approach to managing virtual and containerized workloads in a unified and scalable environment.

At its core, Harvester delivers a compelling proposition for organizations aiming to adopt cloud-like infrastructure within their data centers or edge locations. Traditional HCI platforms often come bundled with tightly integrated virtualization capabilities, making them powerful yet occasionally rigid in their functionality. Harvester, however, goes beyond these conventions. It not only accommodates traditional hypervisors but also fully embraces containerization, aligning itself with the needs of modern enterprises. This flexibility allows organizations to scale horizontally by simply adding nodes to their clusters – a process Harvester makes exceptionally straightforward. You can find more insights about it right here in one of my blog posts.

The architecture of Harvester is a testament to modern software engineering. It relies on SUSE Linux Enterprise Micro 5.3, an ultra-lightweight operating system tailored for containerized workloads. Kubernetes serves as the orchestration engine, ensuring scalability and resilience, while KubeVirt bridges the gap between virtual machines and containers, enabling seamless coexistence and migration of workloads. Finally, Longhorn, the distributed block storage system, provides reliable, highly available, and efficient persistent storage for Kubernetes clusters. Together, these components form an ecosystem optimized for both stability and innovation.
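Because VMs in Harvester are KubeVirt objects, they can be described declaratively like any other Kubernetes resource. Below is a minimal sketch that builds a plain KubeVirt `VirtualMachine` manifest in Python and prints it as JSON, which `kubectl apply -f -` accepts. The VM name, sizes, and PVC name are hypothetical, and Harvester’s own UI adds further annotations and labels that are omitted here.

```python
import json


def kubevirt_vm(name, memory="4Gi", cores=2, claim=None):
    """Build a minimal KubeVirt VirtualMachine manifest backed by an existing PVC."""
    claim = claim or f"{name}-rootdisk"  # hypothetical PVC, e.g. a Longhorn volume
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            "runStrategy": "Always",  # keep the VM running; restart it if it stops
            "template": {
                "spec": {
                    "domain": {
                        "cpu": {"cores": cores},
                        "resources": {"requests": {"memory": memory}},
                        # each disk refers to the volume with the same name below
                        "devices": {"disks": [{"name": "rootdisk", "disk": {"bus": "virtio"}}]},
                    },
                    "volumes": [
                        {"name": "rootdisk", "persistentVolumeClaim": {"claimName": claim}},
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    # Pipe the output into: kubectl apply -f -
    print(json.dumps(kubevirt_vm("demo-vm"), indent=2))
```

Generating manifests as data rather than templating YAML text keeps the disk and volume names consistent by construction.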

One of Harvester’s standout features is its integration with Rancher, SUSE’s renowned Kubernetes management platform. Through this integration, Harvester not only manages traditional virtual machines but also enables organizations to effortlessly deploy and orchestrate containerized applications. This dual capability positions it as an ideal choice for enterprises navigating the transition from monolithic architectures to microservices. Rancher acts as the interface that ties these components together, simplifying what could otherwise be a complex web of configurations and integrations.

Setting up Harvester is remarkably straightforward. Unlike other platforms that demand extensive configuration or steep learning curves, Harvester offers a streamlined deployment experience. Adding new nodes to an existing cluster is equally seamless, ensuring that scaling operations do not disrupt ongoing workloads. This ease of use is further amplified by the platform’s support for ARM64 architectures, opening up opportunities to leverage energy-efficient processors like those built on Ampere’s architecture – a feature particularly advantageous for organizations operating at scale or within power-constrained environments.

However, Harvester is not without its limitations. Its storage backend is currently tied exclusively to Longhorn, which, while robust and feature-rich, may not suit every use case. Longhorn ensures data redundancy across nodes, supports snapshots and backups, and protects against hardware failures, making it a reliable choice for many Kubernetes users. Still, the lack of external storage backend options is a notable drawback. The upcoming version 1.3, anticipated in the first quarter of 2025, is expected to address this limitation by introducing support for external storage backends for VM root disks. Until then, Harvester is best suited for environments comfortable with Longhorn as their primary storage solution.
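To put Longhorn’s redundancy into numbers: with N replicas placed on distinct nodes, every volume consumes N times its size in raw capacity and keeps its data through the loss of N-1 nodes. The sketch below does this back-of-the-envelope calculation. Three replicas is Longhorn’s default, while the `reserve` fraction is a hypothetical parameter standing in for space you keep free; snapshots, thin provisioning, and over-provisioning are ignored.

```python
def replica_plan(nodes: int, raw_gib_per_node: float, replicas: int = 3, reserve: float = 0.0) -> dict:
    """Back-of-the-envelope capacity and fault tolerance for replicated volumes."""
    if not 1 <= replicas <= nodes:
        raise ValueError("replicas must be between 1 and the number of nodes")
    if not 0.0 <= reserve < 1.0:
        raise ValueError("reserve must be a fraction in [0, 1)")
    raw_total = nodes * raw_gib_per_node * (1 - reserve)
    return {
        # every GiB written to a volume is stored `replicas` times
        "usable_gib": raw_total / replicas,
        # replicas sit on distinct nodes, so one healthy copy survives replicas - 1 failures
        "node_failures_tolerated": replicas - 1,
    }


if __name__ == "__main__":
    print(replica_plan(3, 2000))                # three nodes with 2000 GiB each
    print(replica_plan(3, 2000, reserve=0.25))  # keep a quarter of each disk free
```

For three nodes with 2000 GiB each, this yields 2000 GiB of usable space with two tolerated node failures, or 1500 GiB if a quarter of each disk is kept free.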

A unique advantage of Harvester is its compatibility with a GitOps approach to infrastructure management. By embracing GitOps, teams can achieve declarative, version-controlled, and automated deployment of their clusters. This methodology not only enhances operational efficiency but also aligns Harvester with modern DevOps practices, further underscoring its role as a forward-thinking platform.
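The core idea behind GitOps is a reconciliation loop: the desired state lives in Git, a controller compares it with what is actually running, and it derives the actions needed to converge. Tools such as Rancher’s Fleet or Argo CD do this for you; the toy version below only illustrates the comparison step, with made-up VM names and a hypothetical dictionary format for the specs.

```python
def reconcile(desired: dict, actual: dict) -> list:
    """Diff desired state (from Git) against actual state and return ordered actions."""
    actions = []
    for name in sorted(desired.keys() - actual.keys()):
        actions.append(("create", name))       # declared in Git, missing in the cluster
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))       # running, but no longer declared
    for name in sorted(desired.keys() & actual.keys()):
        if desired[name] != actual[name]:
            actions.append(("update", name))   # drift between Git and the cluster
    return actions


if __name__ == "__main__":
    desired = {"vm-a": {"cpu": 2, "memory": "4Gi"}, "vm-b": {"cpu": 4, "memory": "8Gi"}}
    actual = {"vm-b": {"cpu": 2, "memory": "8Gi"}, "vm-c": {"cpu": 1, "memory": "2Gi"}}
    print(reconcile(desired, actual))
```

Here the loop reports that vm-a must be created, vm-c deleted, and vm-b updated; a real controller runs this comparison continuously so that manual drift is corrected automatically.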

Harvester’s ability to unify diverse workloads across centralized data centers and remote edge locations makes it an appealing choice for a wide range of use cases. Its reliance on Kubernetes ensures that it is well-suited for organizations already familiar with container orchestration, while its ease of deployment lowers the barrier for newcomers to the cloud-native paradigm. The current limitation with storage backends might dissuade some potential users, but the promise of future flexibility keeps Harvester in the spotlight for progressive IT teams.

In conclusion, SUSE Harvester is more than just an HCI platform – it is a bridge between the legacy and the future, offering a cloud-native approach to infrastructure management. For organizations willing to embrace its Longhorn-centric storage system, it represents a compelling choice, delivering a unified, scalable, and innovative infrastructure experience. With its strong foundation and roadmap for improvement, Harvester is poised to play a significant role in shaping the next era of enterprise computing.

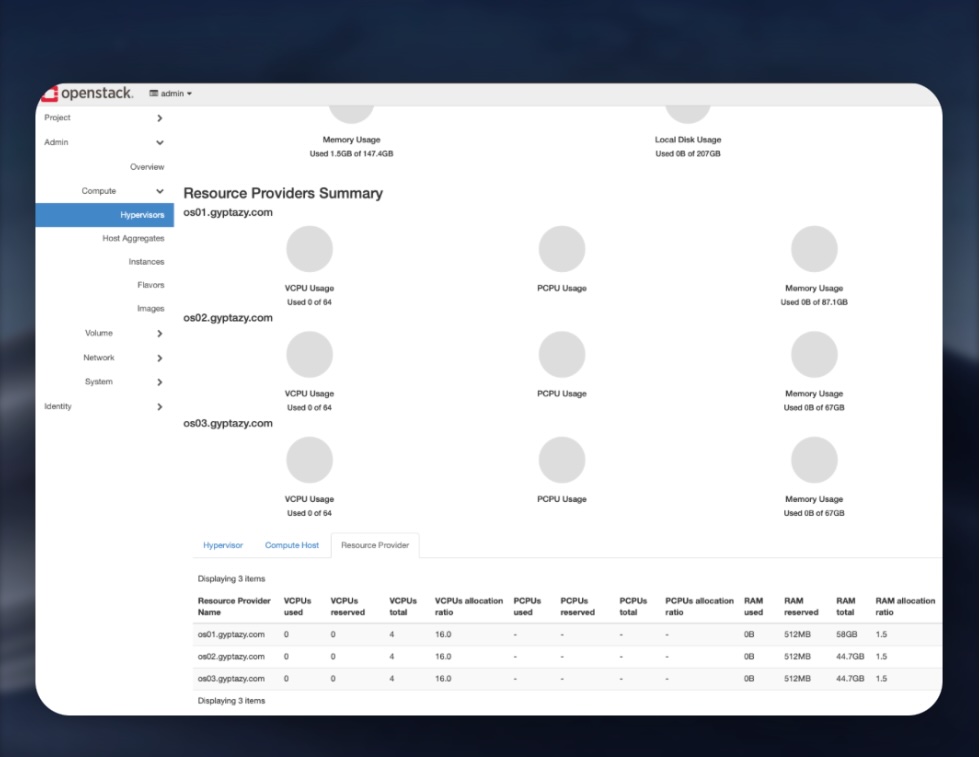

OpenStack

OpenStack is probably one of the richest and most feature-complete solutions in the cloud infrastructure space, yet it is also among the most complex. It is a modular platform designed to serve as an Infrastructure-as-a-Service (IaaS) solution, enabling users to deploy and manage large-scale cloud environments. This modularity makes OpenStack highly customizable and flexible but also demands significant expertise to implement and maintain effectively.

At its core, OpenStack is a collection of services, each tailored to specific aspects of cloud infrastructure. While there are many optional components that can extend its capabilities, seven core services form the foundation of any functional OpenStack instance:

Horizon:

The graphical user interface for OpenStack, Horizon provides an intuitive dashboard for end-users to manage resources, configure instances, and interact with other OpenStack components.

Nova:

The compute service, Nova, is at the heart of OpenStack’s ability to manage virtualized computing resources. It allows users to create and manage virtual machines (VMs), adjust their configurations dynamically, and interact seamlessly with the underlying hypervisors.

Neutron:

Responsible for OpenStack’s networking capabilities, Neutron manages the virtual network infrastructure. It facilitates communication between instances, supports the creation of virtual networks, and enables advanced networking features like VPNs and firewalls.

Glance:

This service handles the management of software images, or virtual disk snapshots, that are used to deploy VMs. Glance organizes these images into a library, simplifying the process of setting up virtual machines for various tasks.

Keystone:

Acting as the authentication and authorization layer, Keystone manages access control in the cloud. It governs user authentication and resource permissions across other OpenStack components.

Cinder:

OpenStack’s block storage solution, Cinder, provides persistent storage akin to a traditional hard drive. It’s ideal for performance-intensive workloads, making it a popular choice for large data operations.

Swift:

This object storage system supports distributed data storage, enabling the handling of unstructured data across multiple drives. Swift replicates objects across drives and nodes, which ensures durability and high availability.
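To see how the core services interlock, consider the two API requests behind launching a VM: a token request to Keystone and a server-create request to Nova that references a Glance image, a flavor, and a Neutron network. The sketch builds only the JSON bodies (nothing is sent); the user, project, and IDs are placeholders. In practice you would POST the first body to Keystone’s `/v3/auth/tokens`, read the token from the `X-Subject-Token` response header, and pass it as `X-Auth-Token` when POSTing the second body to Nova’s `/servers` endpoint, or simply use the `openstack` CLI or openstacksdk, which wrap these calls.

```python
import json


def keystone_password_auth(user, password, project, domain="Default"):
    """Body for POST /v3/auth/tokens (password method, project-scoped)."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {"name": user, "domain": {"name": domain}, "password": password}
                },
            },
            "scope": {"project": {"name": project, "domain": {"name": domain}}},
        }
    }


def nova_create_server(name, image_id, flavor_id, network_id):
    """Body for POST /servers on the Nova compute API (v2.1)."""
    return {
        "server": {
            "name": name,
            "imageRef": image_id,                # Glance image ID
            "flavorRef": flavor_id,              # Nova flavor (vCPU, RAM, disk size)
            "networks": [{"uuid": network_id}],  # Neutron network ID
        }
    }


if __name__ == "__main__":
    print(json.dumps(keystone_password_auth("demo", "secret", "demo-project"), indent=2))
    print(json.dumps(nova_create_server("vm01", "IMAGE-ID", "FLAVOR-ID", "NETWORK-ID"), indent=2))
```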

One of OpenStack’s defining features is its modular architecture. As the core services above already show, a deployment quickly adds up to many components, with all the advantages and disadvantages that brings. Organizations can tailor their deployments to include only the components they need, which makes each OpenStack instance unique. This flexibility allows organizations to start small and scale up as their needs grow, adding new services like advanced storage or networking capabilities over time. OpenStack also integrates with a wide array of hypervisors, storage systems, and networking technologies, creating a broad ecosystem that promotes interoperability and reduces vendor lock-in.

However, this modularity comes at a cost. Each deployment’s uniqueness can complicate debugging and maintenance, particularly for organizations without a dedicated OpenStack team. The learning curve is steep, requiring significant time and resources to train personnel or hire skilled professionals. Automated deployment tools like Packstack can simplify the setup process for small-scale test environments, but they are rarely sufficient for production-level implementations.

OpenStack shines in large-scale and enterprise-grade deployments, particularly in scenarios demanding high-performance computing (HPC). Applications in artificial intelligence (AI), big data analytics, and complex simulations are well-suited for OpenStack’s robust infrastructure. Its scalability and customization make it an attractive choice for organizations looking to build geo-redundant, highly available cloud clusters.

That said, OpenStack is less ideal for small to medium-sized setups. The cost and effort involved in setting up and maintaining an OpenStack environment can outweigh the benefits for smaller organizations. It is best suited for enterprises that can invest in dedicated teams to manage the system and leverage its full potential. Even with automation tools, ongoing maintenance, updates, and security patches remain labor-intensive, often necessitating external consulting services during the initial setup phase.

OpenStack’s open-source nature brings both opportunities and challenges. While the absence of vendor lock-in and the support of a global developer community foster innovation, they also create fragmentation. Differences in how components are implemented or supported can lead to inconsistent experiences. Additionally, the lack of a central authority overseeing its development roadmap can introduce uncertainty about future features and updates. Organizations must be prepared to adapt to community-driven changes and navigate the interdependencies of OpenStack’s components, which can complicate troubleshooting and upgrades.

For organizations seeking a highly flexible, scalable, and vendor-neutral cloud platform, OpenStack is unparalleled. Its support for diverse hardware and software platforms and its broad ecosystem make it an excellent choice for enterprises with complex, large-scale requirements. However, the steep learning curve and resource demands mean that OpenStack is not a casual choice. A dedicated team is essential for managing the system, ensuring security, and optimizing performance.

In summary, OpenStack is a powerful tool for those who can harness its capabilities. With the right investment in expertise and infrastructure, it offers unmatched flexibility and scalability, making it a cornerstone of modern cloud computing for large-scale operations. For those willing to navigate its complexities, OpenStack delivers an infrastructure platform that is both future-proof and highly adaptable. For those who are not, OpenNebula might be an alternative.

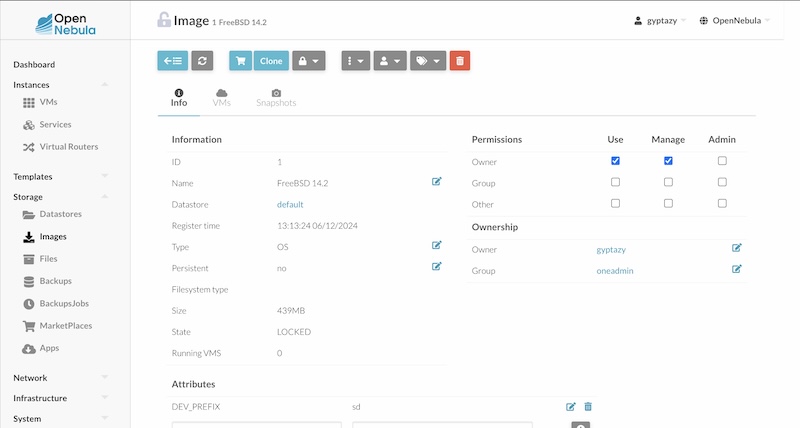

OpenNebula

OpenNebula is a robust, open-source platform for managing virtualized data centers and private clouds. It was first released to the public in 2008, but its origins trace back further as an academic research project. The creators transitioned from research to enterprise-level services in 2010 by founding OpenNebula Systems. This company supports the continued development of OpenNebula while offering professional assistance to users. As of late 2024, OpenNebula is at version 6.10, with its long-term support (LTS) version at 6.4.6, reflecting its ongoing commitment to stability and innovation.

OpenNebula’s primary focus is to simplify virtualization management by providing a streamlined interface for deploying and managing virtual machines (VMs) and containers. Its core architecture employs a single-server design, meaning a single instance handles all resource and service management. This simplicity contrasts with the distributed, multi-server architecture of its competitor, OpenStack. While OpenStack emphasizes scalability for enterprise-grade infrastructure, OpenNebula appeals to users seeking straightforward deployment and operation.

A standout feature of OpenNebula is its default support for the KVM hypervisor and LXC system containers. Users can also extend its functionality to manage other hypervisors, such as VMware and Xen or XCP-ng. Integrating these additional hypervisors requires specific drivers or third-party add-ons. For VMware, OpenNebula manages resources side-by-side with a running VMware vCenter deployment, enabling seamless management of VMware hosts and guest VMs. However, support for Xen or XCP-ng relies on an outdated third-party add-on, limiting its compatibility with current OpenNebula versions. This limitation underscores OpenNebula’s reliance on community contributions for extending its capabilities beyond the core offering.

One of OpenNebula’s notable strengths lies in its user-friendly networking model. It allows users to create and manage virtual networks with ease. This simplicity, however, can be a drawback for users who require advanced features like software-defined networking (SDN) or network function virtualization (NFV), both of which are better supported by OpenStack. Similarly, while OpenNebula integrates with container technologies like Docker, it does not offer the advanced orchestration features provided by dedicated container platforms like Kubernetes.
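A virtual network of the simple kind described above is defined by a short template. The sketch below renders a bridged network with a single IPv4 address range. The helper `bridged_vnet_template`, the bridge name `br0` and the address values are illustrative assumptions; the attribute names (`NAME`, `VN_MAD`, `BRIDGE`, `AR`) follow OpenNebula's virtual network template syntax as documented for recent releases.

```python
import ipaddress


def bridged_vnet_template(name, bridge, first_ip, size):
    """Render an OpenNebula virtual network template with one IPv4 address range."""
    ipaddress.IPv4Address(first_ip)  # raises ValueError on a malformed address
    if size < 1:
        raise ValueError("address range size must be positive")
    return "\n".join([
        f'NAME = "{name}"',
        'VN_MAD = "bridge"',          # plain Linux bridge networking driver
        f'BRIDGE = "{bridge}"',       # existing bridge on the hypervisor hosts (assumed)
        f'AR = [ TYPE = "IP4", IP = "{first_ip}", SIZE = "{size}" ]',
    ])


print(bridged_vnet_template("private", "br0", "192.168.100.10", 50))
```

Saved to a file, the output could be passed to `onevnet create`. Anything beyond this, such as SDN overlays or network functions, falls outside what such a template expresses, which is the gap the paragraph above points out.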

The installation process for OpenNebula is another highlight, offering a hassle-free experience through tools like miniOne. This tool enables users to quickly set up a functioning environment, including both the frontend and optional components like KVM or LXC. In contrast, OpenStack’s installation demands significant expertise and often involves configuring multiple nodes and understanding a more complex ecosystem, even with Packstack, which already simplifies the process.

Despite its advantages, OpenNebula is not without drawbacks. Its XML-RPC API and frontend APIs have room for improvement, particularly in handling high volumes of requests. Furthermore, while the platform excels in small to medium-sized deployments, it may struggle to scale in large enterprise environments. The community around OpenNebula, while dedicated, is smaller than those of OpenStack, Kubernetes or Proxmox. This can limit the availability of plugins, integrations, and extensions, although the project’s emphasis on stability and simplicity often compensates for these shortcomings.
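For a sense of what working with the XML-RPC API looks like, the following sketch lists virtual machines using Python's standard `xmlrpc.client`. It assumes the frontend listens on the default endpoint `http://<frontend>:2633/RPC2`, that the session string has the `user:password` form, and that `one.vmpool.info` accepts a filter flag, a start ID, an end ID and a state filter. `fetch_vm_pool` and `summarize_vms` are hypothetical helpers. The pool comes back as a single XML string that the client parses itself.

```python
import xml.etree.ElementTree as ET
import xmlrpc.client


def fetch_vm_pool(endpoint, session):
    """Return the raw VM pool XML from an OpenNebula frontend."""
    proxy = xmlrpc.client.ServerProxy(endpoint)
    # Filter -2: all resources; start/end -1: no ID range; state -1: any state except DONE
    response = proxy.one.vmpool.info(session, -2, -1, -1, -1)
    if not response[0]:
        raise RuntimeError(f"OpenNebula error: {response[1]}")
    return response[1]


def summarize_vms(pool_xml):
    """Extract (ID, NAME, STATE) tuples from a VM_POOL document."""
    root = ET.fromstring(pool_xml)
    return [
        (int(vm.findtext("ID")), vm.findtext("NAME"), int(vm.findtext("STATE")))
        for vm in root.findall("VM")
    ]


# Offline usage with a hand-written sample document (not real output)
sample = "<VM_POOL><VM><ID>7</ID><NAME>web01</NAME><STATE>3</STATE></VM></VM_POOL>"
print(summarize_vms(sample))  # [(7, 'web01', 3)]
```

Separating the network call from the parsing keeps the parser testable without a running frontend.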

OpenNebula carves out its niche by offering a reliable and straightforward solution for managing virtualization resources. Its focus on ease of use and integration with established technologies makes it a compelling choice for organizations that value simplicity and stability over the expansive but complex feature sets of competitors like OpenStack. However, it remains more suited for small to mid-scale environments, where its limitations in scalability and networking features are less impactful. For users seeking a quick start, tools like miniOne make exploring OpenNebula an accessible and rewarding experience.

Other Solutions

The virtualization market is teeming with alternatives that cater to a broad spectrum of organizational needs. These options range from open-source platforms like oVirt and Apache CloudStack to proprietary solutions such as Microsoft Hyper-V and Nutanix AHV. Each comes with unique strengths, weaknesses, and use cases, making the decision-making process as much about aligning with business goals as about technical compatibility.

Open-source solutions are gaining traction as organizations seek cost-effective and transparent alternatives to commercial offerings. oVirt, a KVM-based platform, delivers enterprise-grade virtualization with robust management tools. Apache CloudStack, on the other hand, focuses on building Infrastructure-as-a-Service (IaaS) environments with advanced networking and multi-hypervisor support. These open-source platforms are supported by vibrant communities and offer flexibility, but they require technical expertise and are often more complex to set up than their proprietary counterparts.

On the proprietary side, Microsoft Hyper-V stands out for its seamless integration with Windows ecosystems, making it a logical choice for businesses heavily invested in Microsoft technologies. Nutanix AHV simplifies IT operations through hyper-converged infrastructure and a focus on one-click management, though its ecosystem-centric approach may deter those seeking a more modular system.

The broader virtualization landscape also includes Citrix XenServer, Red Hat Virtualization and Proxmox VE, currently the best-known alternative of all, each addressing specific use cases and preferences. These solutions further diversify the market, ensuring businesses have a plethora of options beyond dominant players like VMware.

Rethinking Commercial Projects Post-Broadcom Acquisition

The Broadcom acquisition of VMware has introduced an element of uncertainty into the market. Businesses reliant on VMware are considering the implications of potential changes in pricing models, support policies, and product roadmaps. This acquisition serves as a cautionary tale about the risks of vendor lock-in and has prompted renewed interest in open-source platforms as a hedge against unpredictable shifts in commercial strategies. For organizations planning a switch, free and open-source solutions may be more worth considering than ever.

Conclusion

The virtualization market today offers an impressive array of free and open-source solutions, providing flexibility and innovation to those looking to virtualize their workloads. From tools like Proxmox and OpenStack to lesser-known options like bhyve in FreeBSD, there’s a solution for nearly every scenario. However, it is crucial to understand that no open-source product currently serves as a 1:1 replacement for VMware, the long-standing leader in enterprise-grade virtualization. While free and open-source alternatives shine in many areas, they bring unique limitations and advantages that must be carefully weighed against your specific needs.

Before embarking on the journey to adopt or switch virtualization platforms, you must validate your requirements thoroughly. Each business or project has distinct priorities in performance, scalability, ease of management, or cost-effectiveness, and no single solution is universally the best. Without a detailed evaluation, it’s impossible to claim one product is inherently better than another. This is especially true as all prominent virtualization tools are the result of years of engineering excellence, each designed to excel in different areas. The decision isn’t about finding the “best” product but about finding the right fit for your use case.
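A weighted decision matrix is one simple way to make such an evaluation explicit: each criterion gets a weight reflecting your priorities, each candidate gets a score per criterion, and candidates are ranked by the weighted average. In the sketch below, "Platform A" and "Platform B" are placeholders and their 1 to 5 scores are invented for illustration; they are not measurements of any real product. The point is only that the same scores produce a different winner once the weights change.

```python
# Hypothetical scores from 1 (poor) to 5 (excellent); not real benchmark data
PLATFORMS = {
    "Platform A": {"performance": 4, "scalability": 2, "management": 5, "cost": 5},
    "Platform B": {"performance": 5, "scalability": 5, "management": 2, "cost": 2},
}

# Two illustrative weightings of the same criteria
SMALL_TEAM = {"management": 4, "cost": 3, "performance": 2, "scalability": 1}
LARGE_SCALE = {"scalability": 4, "performance": 3, "management": 2, "cost": 1}


def weighted_score(scores, weights):
    """Weighted average of a platform's criterion scores."""
    total = sum(weights.values())
    return sum(weights[c] * scores[c] for c in weights) / total


def rank(platforms, weights):
    """Platform names sorted from best to worst under the given weights."""
    return sorted(platforms, key=lambda p: weighted_score(platforms[p], weights), reverse=True)


print(rank(PLATFORMS, SMALL_TEAM))   # ['Platform A', 'Platform B']
print(rank(PLATFORMS, LARGE_SCALE))  # ['Platform B', 'Platform A']
```

The hard part in practice is not the arithmetic but agreeing on honest weights and scores for your own environment.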

Proxmox, for instance, has gained immense popularity in recent years, thanks to its open-source ethos, ease of use, and the integration of containerization with KVM-based virtualization. However, it’s not a silver bullet. Proxmox does not suit every scenario, even though some advocates might argue otherwise. The platform’s feature set, while impressive, doesn’t always align with the needs of enterprise-level deployments or highly customized virtualization environments. It’s a powerful tool, but like any tool, it performs best when applied to the right task.

Similarly, OpenStack is often touted as a robust and versatile solution for those looking to build large-scale cloud infrastructures. Its flexibility and scalability are unparalleled, but they come at the cost of considerable complexity. Deploying OpenStack requires a team with specialized knowledge and a commitment to maintaining the system over time. Training, troubleshooting, and ongoing support can consume significant resources, making it an impractical choice for smaller clusters or less experienced teams. OpenStack should only be considered when the scale of your operation justifies the investment in expertise and infrastructure. For small to medium-scale setups, simpler solutions often prove more cost-effective and manageable.

On the other end of the spectrum lies bhyve, the hypervisor native to FreeBSD. It’s a compelling option for certain use cases, particularly for single-node setups where simplicity and FreeBSD’s ecosystem are critical. However, bhyve’s lack of robust support for live migrations, a feature that is essential for modern enterprise environments, limits its applicability in larger deployments. While it may shine in specific scenarios, bhyve might not be well-suited for businesses that rely on high availability and seamless workload migration, although this may change in the near future.

In conclusion, the vibrant and diverse ecosystem of virtualization tools offers something for everyone, but it demands careful consideration. Each product excels in different areas and serves different audiences. The key to success lies in understanding your own requirements and evaluating potential solutions with an open mind. While open-source virtualization platforms democratize access to cutting-edge technology, they must be selected with a clear strategy in mind. There is no universal “right” or “wrong” choice – only the choice that best aligns with your goals and constraints.